A Modular Approach to Reproducible Research

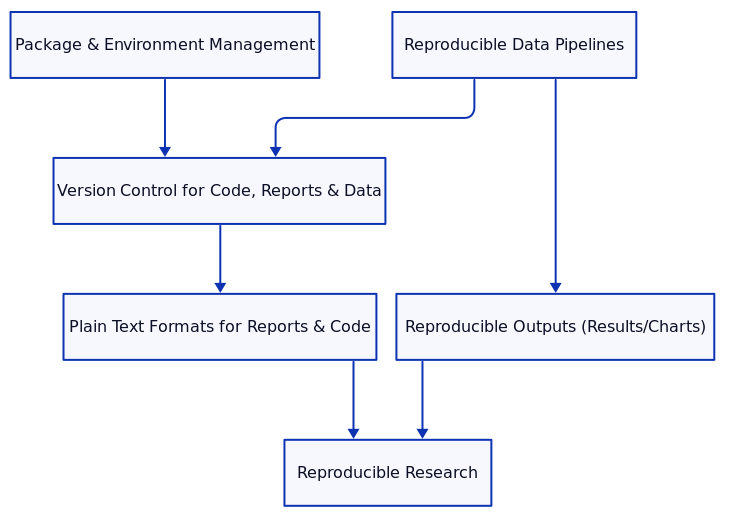

Reproducibility is a cornerstone of scientific progress, ensuring that research results can be replicated and verified by others, as well as by the original researchers at a later time. By breaking down research practices into distinct modules, researchers can address key challenges that arise in achieving reproducibility. This blog covers essential modules for ensuring reproducibility: package and environment management, reproducible data pipelines, version control for code, reports, and data, reproducible output, and the advantages of plain text formats.

1. Package Version and Environment Management

Managing package versions and environments ensures that your code runs identically across different systems, independent of updates or changes in dependencies. Tools such as Mamba, Pixi, and UV allow researchers to create isolated environments that lock down specific versions of software libraries and tools.

Why Environment Management?

Reproducibility breaks down when code that once worked suddenly fails due to updated dependencies. By using environment managers, you can “freeze” the environment configuration so others can recreate it exactly.

Example: Using Mamba for Environment Management

# Create an environment with specific package versions

mamba create -n research_env python=3.9 numpy=1.21 pandas=1.3 matplotlib=3.4

# Activate the environment

mamba activate research_env

# Export the environment configuration

mamba env export > environment.yml

# Recreate the environment from YAML

mamba env create -f environment.ymlBy sharing the environment.yml file, anyone can recreate the same environment, ensuring that code runs identically.

2. Reproducible Data Pipelines

After setting up the environment, the next step is ensuring that data processing pipelines produce consistent results. A reproducible data pipeline ensures that given the same raw data and the same processing steps, the outputs will be the same.

Why Reproducible Data Pipelines?

Data manipulation, cleaning, and transformation steps can introduce variability if not well-structured. Using pipeline automation tools such as Snakemake or Nextflow allows researchers to define and automate the data processing workflow.