Making Large Language Models Faster and More Energy Efficient with BitNet and bitnet.cpp

Large Language Models (LLMs) are becoming increasingly capable, but they also demand more computing power and energy. Researchers created BitNet and its supporting framework, bitnet.cpp, to tackle these obstacles and offer a more efficient way to run these models. In this article, we explain what this technology does and how it can benefit everyone, particularly people running AI on their personal devices.

What is BitNet?

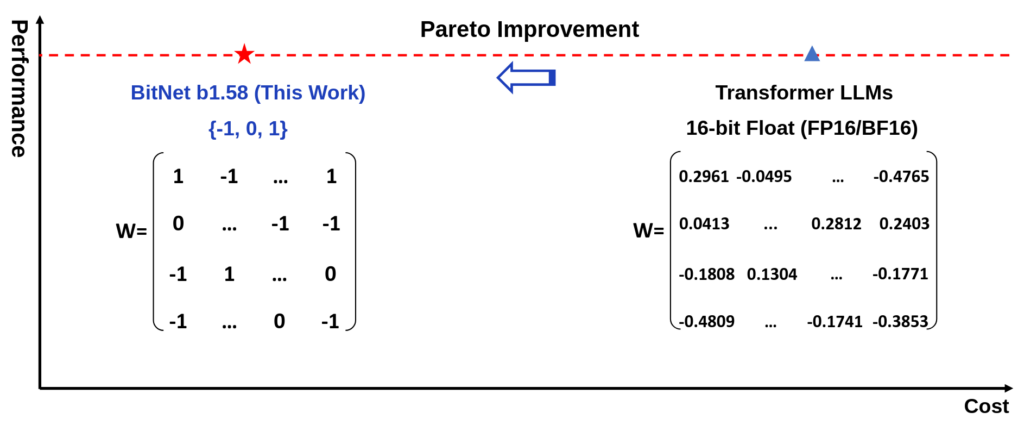

BitNet is a type of LLM whose weights are stored at 1-bit or 1.58-bit precision. Instead of 16- or 32-bit floating-point numbers, each weight takes one of two values (1-bit) or one of three values, -1, 0, or +1 (1.58-bit, since log2(3) ≈ 1.58). Think of it as shorthand writing: the same message conveyed with fewer symbols. Lower precision lets the model run faster and use less energy, while aiming to keep output quality close to that of a full-precision model.
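To make the idea of 1.58-bit weights concrete, here is a minimal sketch of ternary quantization in plain Python. It assumes an "absmean" scheme: divide each weight by the mean absolute value of the group, round, and clip to -1, 0, or +1. The function name, the sample weights, and the `eps` parameter are illustrative assumptions, not code taken from BitNet itself.

```python
import math


def absmean_ternary_quantize(weights, eps=1e-8):
    """Map float weights to values in {-1, 0, 1} plus one shared scale.

    Illustrative sketch only: scale by the mean absolute value,
    round to the nearest integer, then clip to the range [-1, 1].
    """
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    quantized = [max(-1, min(1, round(w / scale))) for w in weights]
    return quantized, scale


# Hypothetical full-precision weights, chosen only for the example.
weights = [0.9, -0.05, -1.4, 0.3, 0.02, -0.6]
q, scale = absmean_ternary_quantize(weights)

# Three possible values carry log2(3) bits of information each,
# which is where the "1.58-bit" name comes from.
bits_per_weight = math.log2(3)

print(q)                          # [1, 0, -1, 1, 0, -1]
print(round(bits_per_weight, 2))  # 1.58
```

Small weights collapse to 0 and large ones saturate at -1 or +1, so the single `scale` value is all that remains of the original magnitudes.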

What is bitnet.cpp?

bitnet.cpp is the inference framework built to run these 1-bit LLMs efficiently on everyday devices such as laptops and desktops. It lets large models run on standard CPUs rather than requiring costly GPUs. This makes it easier to use AI locally, including on machines that were not designed for machine learning.
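One reason such models suit ordinary CPUs is that a dot product with weights restricted to -1, 0, and +1 needs no multiplications, only additions and subtractions. The sketch below illustrates that general idea in plain Python. It is not bitnet.cpp's actual kernel code, and the function name and sample values are hypothetical.

```python
def ternary_dot(activations, ternary_weights, scale=1.0):
    """Dot product where every weight is -1, 0, or +1.

    Each weight either adds the activation, subtracts it, or skips it,
    so the inner loop contains no multiplications.
    """
    acc = 0.0
    for x, w in zip(activations, ternary_weights):
        if w == 1:
            acc += x
        elif w == -1:
            acc -= x
        # w == 0 contributes nothing and is skipped.
    # A single multiplication by the shared scale restores the magnitude.
    return acc * scale


# Hypothetical inputs, chosen only for the example.
activations = [2.0, 5.0, -1.0, 0.5]
ternary_weights = [1, 0, -1, -1]

result = ternary_dot(activations, ternary_weights)
reference = sum(x * w for x, w in zip(activations, ternary_weights))

print(result)  # 2.5
```

The `reference` value computes the same dot product with ordinary multiplications, which makes it easy to check that the add-and-subtract version gives the same answer.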

Why Should You Care About 1-Bit AI?

Running LLMs efficiently offers several advantages:

- Faster responses – Less time spent waiting on lengthy computations.

- Energy savings – Lower power draw extends battery life on mobile devices such as laptops and phones.

- On-device AI – Complex models can run without depending on the cloud, which improves privacy and accessibility.